SGP

Propagating uncertainty in large datasets

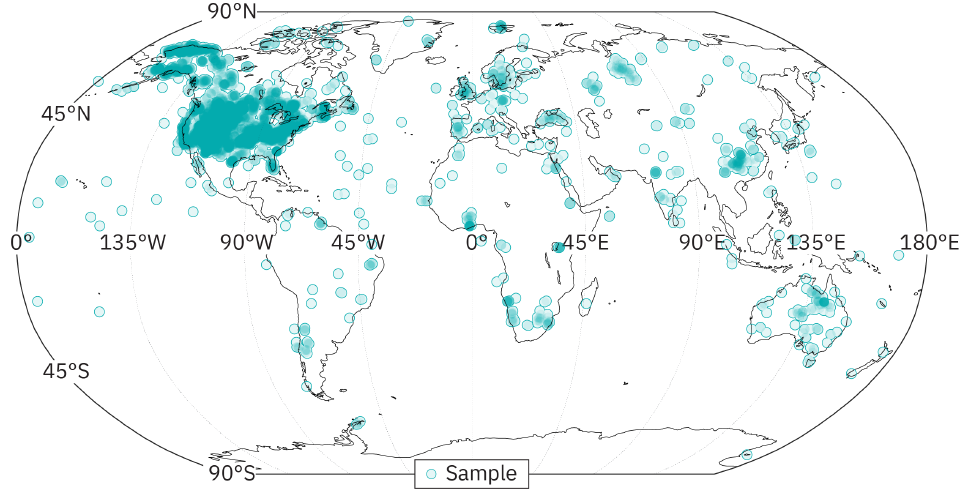

A map showing the locations of the approximately 85,000 samples that make up the SGP database. Note the uneven coverage—North America is much more densely sampled than Africa, for example.

Large, globally sourced compilations of geologic observations can provide critical insights into a wide range of Earth surface processes. These datasets comprise thousands of individual measurements, each with some degree of uncertainty, both temporal (i.e., when is it from?) and analytical (i.e., what is the measurement error?). They are also heterogeneous: samples are not evenly distributed in time or space (see, for example, the map above).

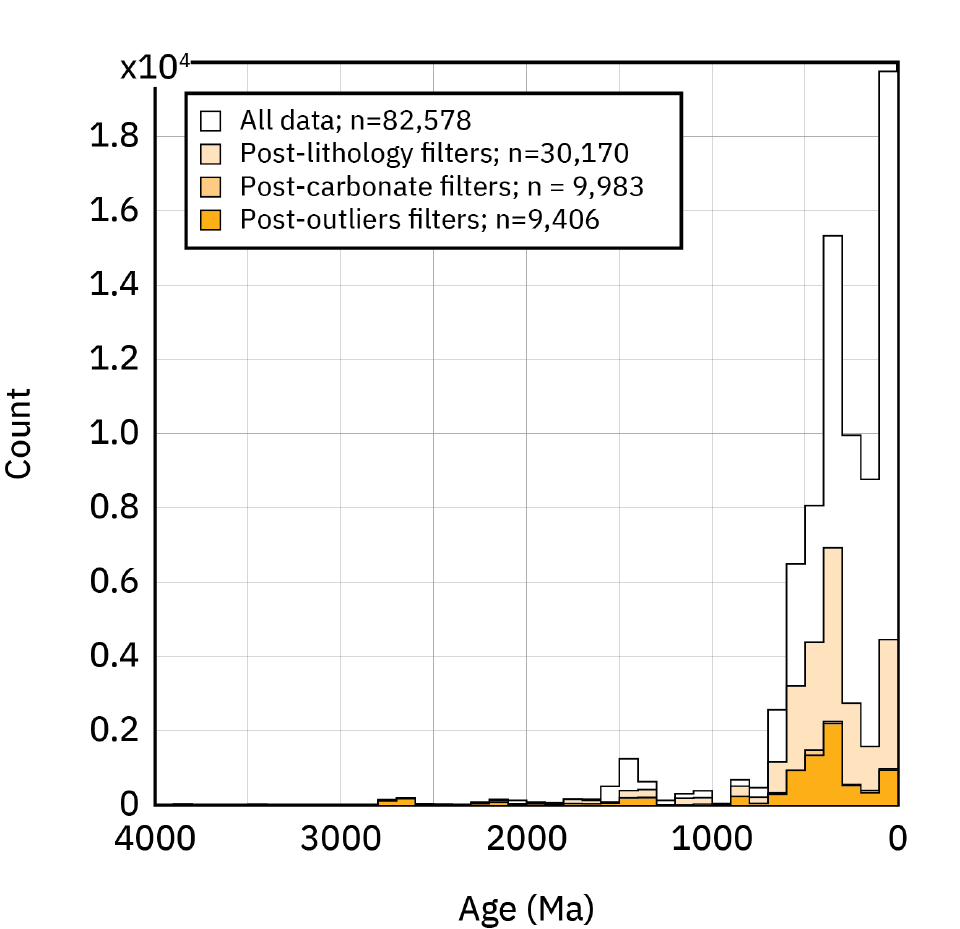

How can we extract meaningful trends from these datasets while propagating uncertainties? One solution is to combine filtering and resampling. Filtering lets us target the data that are relevant to the problem we are trying to address. For example, if we are interested in how the geochemistry of fine-grained mudstones has changed through time, we do not want to include measurements from carbonates. By filtering out the carbonate-specific data, we produce a dataset that is more representative of our question. Resampling lets us incorporate uncertainty into our results. By repeatedly drawing from our data, using distributions defined by the uncertainties associated with each measurement, and then calculating the statistics of these drawn samples, we can estimate a range of values for a given property.
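The filter-then-resample idea can be sketched in a few lines of Python. This is a minimal illustration, not the SGP project's actual workflow: the function names, column names, thresholds, and synthetic data are all hypothetical, and it assumes that both age and analytical uncertainties are Gaussian and that samples are drawn uniformly with replacement (a plain bootstrap, with no weighting for uneven spatial or temporal coverage).

```python
import numpy as np


def filter_samples(lithology, carbonate_pct, keep_lithologies, max_carbonate_pct):
    """Boolean mask of samples with a wanted lithology and low carbonate content."""
    lith_ok = np.isin(lithology, list(keep_lithologies))
    carb_ok = carbonate_pct <= max_carbonate_pct
    return lith_ok & carb_ok


def resample_binned_mean(age, age_sigma, value, value_sigma, bin_edges,
                         n_draws=1000, seed=0):
    """Return an (n_draws, n_bins) array of per-bin means, one row per draw.

    Assumption: each age and value is perturbed with Gaussian noise whose
    standard deviation is that measurement's stated uncertainty.
    """
    rng = np.random.default_rng(seed)
    n_bins = len(bin_edges) - 1
    means = np.full((n_draws, n_bins), np.nan)
    for i in range(n_draws):
        # Draw samples with replacement (uniform bootstrap, an assumption).
        idx = rng.integers(0, len(age), size=len(age))
        # Perturb each drawn sample within its own uncertainty.
        drawn_age = rng.normal(age[idx], age_sigma[idx])
        drawn_val = rng.normal(value[idx], value_sigma[idx])
        # Assign to time bins; ages outside the edges match no bin.
        which = np.digitize(drawn_age, bin_edges) - 1
        for b in range(n_bins):
            in_bin = which == b
            if in_bin.any():
                means[i, b] = drawn_val[in_bin].mean()
    return means


# Synthetic stand-in data (made up for illustration only).
demo_rng = np.random.default_rng(42)
n = 500
lithology = demo_rng.choice(["mudstone", "shale", "carbonate"], size=n)
carbonate_pct = demo_rng.uniform(0, 100, size=n)
age = demo_rng.uniform(0, 1000, size=n)      # Ma
age_sigma = np.full(n, 10.0)                 # Ma
value = demo_rng.lognormal(0.0, 0.5, size=n)  # arbitrary analyte
value_sigma = 0.05 * value

keep = filter_samples(lithology, carbonate_pct, {"mudstone", "shale"}, 10.0)
bin_edges = np.arange(0.0, 1001.0, 250.0)
means = resample_binned_mean(age[keep], age_sigma[keep],
                             value[keep], value_sigma[keep], bin_edges)

# Median and 95% interval of the binned mean across all draws.
lo, mid, hi = np.nanpercentile(means, [2.5, 50, 97.5], axis=0)
print(np.column_stack([bin_edges[:-1], lo, mid, hi]))
```

The spread of the per-bin means across draws is what provides the range of values for each time interval. A real analysis of a heterogeneous dataset would likely also need to account for the uneven sampling noted above, which this sketch does not attempt.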

A histogram depicting the effects of progressive filtering on the SGP dataset. Samples were filtered by lithology, carbonate content, and outlier values.

[1] The Phase 1 SGP data product comprises approximately 85,000 samples, each with many different geochemical analytes, that are spread throughout time and space.
